LLM & Generative AI: LLMs, the ‘Generative AI technology behind ChatGPT, are remarkable in their understanding of human language and their ability to generate near human responses in language (image models generate images). In the span of one year, they have become ubiquitous with use cases that are wide spread across many personal and business situations. Currently, every business is either incorporating or planning to incorporate the LLMs (image models) in their operations and strategic activities.

Problems with LLMs:

LLMs, however, have their pitfalls and limitations! Notable among the flaws is that LLM generated outputs are not reliable nor accurate – problem of ‘hallucination’. As a result, LLMs produce outputs that are inconsistent i.e. for the same inputs at different times LLMs produce different and possibly incorrect outputs. Business solutions that are built on LLMs that do not have adequate verification steps are themselves prone to inaccurate outputs, rendering the business solutions impractical.

Enhancing Language Models:

LLMs goal is to extend the pattern of each input they are given with their generated output based on statistically significant matches from the enormous volumes of data they were trained on. This is why LLMs are called ‘stochastic parrots’! The other shortcoming of LLMs is that they are static as the breadth of their answers is limited to the training data, hence leaving out the data that became available after the training date, resulting in incorrect factual outputs. LLMs also fall short in domain specific tasks which are necessary for the business use cases that are of high value where the answers to questions are expected to be correct and constrained to the domain – expert answers. There have been recent developments that augment LLMs with techniques such as RAG – Retrieval Augmented Generation together with Vector databases, which extend the context window while supplying domain specific content to the LLM, resulting in improved performance of LLMs. Yet the outputs suffer inaccuracies and inconsistencies (to be discussed in a future blog)

LLMs are also not adept in reasoning along with other short comings such as biases, cost of training and unclean training data, etc. (to be dealt in future blogs)

With this in the backdrop, the focus has since shifted to making up for the problems with LLMs like ‘hallucinations’.

There is a practical solution to the problems and inadequacies of LLMs, Knowledge Graphs.

Overcoming Limitations: How Knowledge Graphs Enhance Business Solutions:

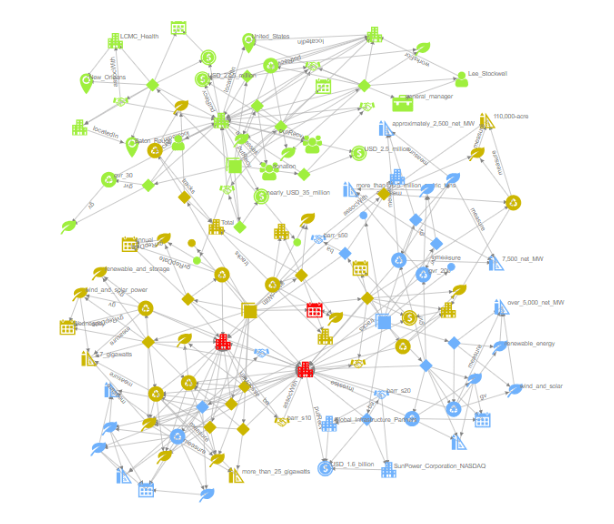

Knowledge Graphs offer curated information organized in a graph structure with entities (people, places, things, etc) as nodes, and the relationships between them as edges. Relationships expressed in a knowledge graph uncover newer facts and lead to valuable insights. Knowledge Graphs being domain specific house expert knowledge necessary to support correct and consistent answers targeting the domain. These and other features of Knowledge Graphs directly address the critical flaws that hamper LLMs’ wider adoption in business-critical solutions.

Recently, it has become known that Knowledge Graphs deployed together solve the deficiencies of LLMs and LLMs enhance the Knowledge Graphs by adding natural language querying (details in a future blog). TextDistil, one such language and knowledge solution, is an innovative end-to-end language processing pipeline that combines both LLM and Knowledge Graph technology. TextDistil pipeline enables domain specific ‘no code’ Knowledge Graph generation from input text and exposes a natural language open-text query interface to the raw text producing answers that have provenance links back to the raw text input.

Lead Semantics conducted an expert panel and a workshop on “Convergence of Large Language Models and Knowledge Graphs“, at the PRICAI – Pacific Rim International Conference on Artificial Intelligence, November, 2023, in Jakarta, Indonesia.